Is Your NHS Trust AI-Ready?

The evolution of artificial intelligence (AI) will reshape the delivery of healthcare, offering opportunities for NHS trusts to enhance patient care, streamline operations, and improve outcomes. However, embracing AI is not a simple, one-size-fits-all process. It requires an organisation to be ‘AI-ready’: a level of preparedness for AI integration that spans multiple facets of a healthcare organisation’s digital strategy.

The digital maturity of secondary care providers in England is mixed.

For 2024, average digital maturity sits between 2.4 and 2.6 out of 5 based on NHS England’s Digital Maturity Assessment scores.

NHS England's Digital Maturity Assessment (DMA) framework is primarily designed to evaluate the digital capabilities and readiness of healthcare organisations, such as NHS trusts, in terms of their infrastructure, technology adoption, and digital transformation.

The assessment, which is based on the seven success measures of ‘What Good Looks Like’, helps care providers gauge how well they are implementing digital tools, such as AI, to improve patient care and operational efficiency.

This article outlines the questions NHS trusts should consider when assessing their AI maturity and identifying what is needed to become AI-ready. We also highlight the significance of digital strategy alignment, ethical considerations, and the right infrastructure in ensuring AI adoption is effective, responsible and future-proofed.

What is AI Maturity?

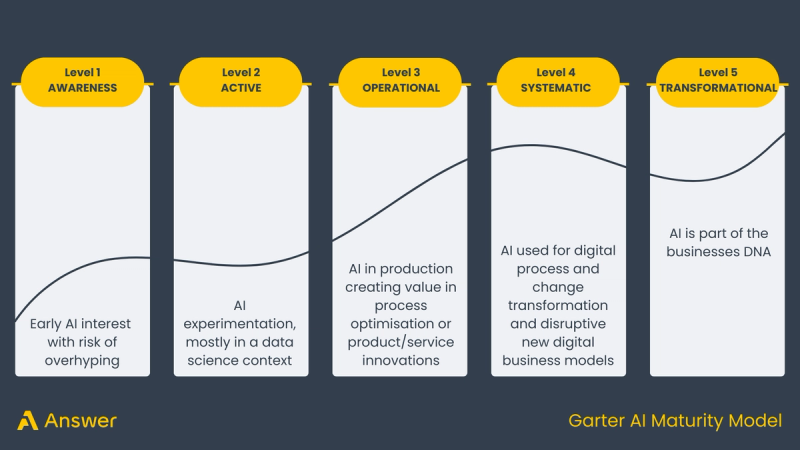

AI maturity refers to the level of preparedness an organisation has for implementing AI solutions in its clinical pathways and operational workflows. It spans everything from foundational elements such as data infrastructure and governance to the monitoring of advanced AI deployments that can significantly improve clinical and administrative outcomes.

NHS trusts should evaluate their AI maturity as part of their broader digital transformation strategy, aligning it with the specific challenges they face in areas like patient care, waiting times, and operational efficiency.

Six key areas for AI Maturity

To help NHS trusts assess their AI readiness, here are six key areas for self-assessment. Each section includes essential questions that will guide trusts through the AI maturity curve, helping them understand where they currently stand and what steps are needed to become AI-ready.

Priorities and strategy

One of the first steps to becoming AI-ready is understanding your organisation's digital priorities. AI should be deployed to address the most pressing challenges facing your trust, whether that be managing waiting lists, improving diagnostics, or enhancing patient outcomes.

Key questions:

- What are the main challenges your trust is currently facing?

- How do these challenges align with the priorities of your trust’s digital strategy?

- Is there an existing AI strategy in place, and does it focus on addressing the primary issues identified?

- Do you need help understanding what models exist to help with your key challenges?

All NHS organisations are under pressure, and investment in new technology isn’t a panacea. There are over 200 CE-marked AI applications on the market, and it can be daunting to know where to start. It is also important to consider that the evidence base demonstrating the efficacy of models for various specialisms is still in its infancy.

Organisations like NICE highlight models that are recommended for use while evidence gathering is ongoing.

Tip: Pick one or two key challenges your teams are facing. For example, it might be that you have a bigger backlog of patients waiting for treatment on one or two pathways compared to others.

Start here, and identify a number of models that are designed for these specific pathways and problems. A focused AI strategy aligned with key challenges can ensure that AI adoption delivers tangible value, rather than becoming a solution in search of a problem.

How is the development of AI as part of your digital strategy being funded?

All digital investment projects require funding, and we’ve seen examples of funding being made available to accelerate the deployment of AI, only for it to be withdrawn further down the line. However, there are a number of options open to trusts, including UK Research and Innovation, Innovate UK, Small Business Research Initiatives, NIHR i4i, and of course national and regional funding streams as well as locally-held budgets.

Tip: It is possible to partner with private organisations such as Answer Digital, which can help mobilise the NHS, academic and model stakeholders needed for successful bids.

As funding is limited and time-bound, it is important to consider where it is best allocated to support your AI strategy.

For example, consider carefully how your approach makes the best use of limited resources and funding to enable multiple trials of models across your key clinical specialities both now and in the future.

Information architecture

A key infrastructure consideration for any AI strategy is whether to deploy AI in the cloud or on-premise. Following a public cloud-first approach aligns with the NHS’ architecture principles, and many trusts are moving towards cloud-native enterprise imaging systems for the increased security, scalability and ease of access to data that they enable.

Fully on-premise deployments can prove particularly costly due to the expensive hardware needed to run AI models at the speed required for clinical care. In the future, it is likely organisations in the same region will use multi-tenanted cloud implementations based on a single regional instance that several trusts and academic institutions connect to.

Key questions:

- Where does AI sit within your current digital infrastructure?

- Does your trust operate a cloud-first, hybrid, or on-premise strategy, and is it flexible enough to integrate AI?

- Is your Picture Archiving and Communication System (PACS) cloud-based?

- Is your PACS shared with other organisations?

- How adaptable is your digital infrastructure to accommodate changes in technology?

Tip: Embracing a cloud-first approach will bring your trust in line with the preferred approach of many AI applications. While this is an obvious choice for trusts that have already adopted a cloud strategy for their PACS and Radiology Information System (RIS), the truth is that many trusts have not yet taken this step.

If you’re one of those trusts, deploying an on-premise pseudonymisation service instils confidence by keeping identifiable patient data within the trust's boundaries, whilst still giving you the benefits of running AI inference in the cloud. This hybrid approach allows you to tap into the full range of AI solutions available in the market whilst protecting patient data.
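To make the hybrid idea concrete, here is a minimal Python sketch of an on-premise pseudonymisation step. It is an illustration under stated assumptions rather than a description of any particular product: the field names, the `prepare_for_cloud` helper and the key handling are all hypothetical, and real imaging de-identification (for example of DICOM headers and burnt-in pixel data) involves considerably more than this.

```python
import hashlib
import hmac

# Hypothetical set of direct identifiers; a real service would follow the
# trust's own information governance policy.
DIRECT_IDENTIFIERS = {"nhs_number", "name", "date_of_birth", "address"}


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Return a stable keyed pseudonym for a patient identifier."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def prepare_for_cloud(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and attach a pseudonym before data leaves the trust."""
    outbound = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    outbound["pseudonym"] = pseudonymise(record["nhs_number"], secret_key)
    return outbound


# Made-up example values; the key is assumed to be held on-premise only.
SECRET_KEY = b"held-on-premise-only"
record = {
    "nhs_number": "0000000000",
    "name": "Test Patient",
    "date_of_birth": "1970-01-01",
    "study_type": "chest_xray",
}
outbound = prepare_for_cloud(record, SECRET_KEY)
```

Because the pseudonym is derived with a key that never leaves the trust, results returned from the cloud can be matched back to the patient on-premise without the cloud service seeing who the patient is.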

Governance and ethics

The ethical considerations for AI in healthcare cannot be overstated. NHS trusts need to ensure that AI systems are implemented in a way that is safe, transparent, and accountable, protecting both patients and data.

Key questions:

- Do you have the right governance structures in place for AI oversight to help models move from incubation to deployment within clinical pathways?

- Are the right people in your organisation empowered to influence AI strategy, with sufficient knowledge to guide decision-making?

- Are ethics committees actively engaged in AI implementation?

- How is data protection being managed to ensure privacy and security?

A robust governance framework, coupled with a strong ethical oversight process, is critical to ensuring AI is implemented safely and effectively.

Tip: AI models should be interpretable to clinicians, patients, and decision-makers. This means NHS organisations must be able to explain how the AI system arrives at its decisions, especially in critical areas such as diagnostics or treatment recommendations. This should include documenting the decision-making process, involving multi-disciplinary teams, and ensuring clinical oversight.

Culture

AI readiness is not just about technology; it’s about people. NHS trusts must ensure their workforce, particularly clinical teams, are ready and willing to work with AI. Fear or misunderstanding of AI can hinder adoption and limit its potential benefits.

Key questions:

- Do your clinical teams understand how AI can help them in their roles?

- How are AI outputs and their role in clinical workflows included in your education programmes?

- Is there a fear of AI among your clinical workforce, and is this being addressed?

- Do you have clinical champions who can advocate for AI development and help integrate it into clinical practice?

Tip: Building a culture of innovation and trust around AI is a critical step in ensuring its successful adoption. Actively involve clinicians, patients, technologists, and ethicists in the decision-making process from the outset. Provide education, create feedback loops, regularly share information about how AI solutions are being evaluated, and demonstrate a strong commitment to ethical use.

Implementation

Deploying AI solutions is a significant challenge, both for the clinical and operational teams who evaluate them before building them into clinical workflows and for the digital teams responsible for managing and maintaining them once rolled out. A ‘race to the bottom’ on implementation pricing by model vendors risks devaluing the outputs and overlooking the hearts-and-minds approach needed to help clinical teams embrace AI.

Key questions:

- What is your AI vendor strategy, and are you able to evaluate a wide enough range of options?

- Are the AI models being trialled aligned with the trust's main challenges, such as reducing waiting times or improving diagnostics?

- Are you effectively utilising national, regional, and local funding for AI initiatives?

- Do you know how your chosen model vendors will support implementation, and how this is priced? Beware of hidden costs.

Tip: An open AI deployment platform approach enables an organisation to run multiple AI models in shadow mode before moving to full commission. Each model can first be thoroughly tested in a standardised approach before integrating directly back into live clinical workflows.

With a multi-vendor point-to-point (P2P) integration approach, evaluation is more time-consuming because each integration needs its own upfront investment in resources. When evaluating different model vendors and their implementation approach, be clear on what support the vendor will give you.
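As a rough illustration of what a shadow-mode evaluation might record, the Python sketch below compares model outputs (which clinicians do not see during the trial) with the clinicians' own conclusions. The `ShadowResult` structure and `agreement_summary` function are hypothetical names chosen for this example, and the data is made up.

```python
from dataclasses import dataclass


@dataclass
class ShadowResult:
    study_id: str
    model_flagged: bool      # model output, hidden from clinicians in shadow mode
    clinician_flagged: bool  # the reporting clinician's conclusion


def agreement_summary(results):
    """Summarise how often the model and clinicians agreed, and where they differed."""
    total = len(results)
    agree = sum(r.model_flagged == r.clinician_flagged for r in results)
    model_only = sum(r.model_flagged and not r.clinician_flagged for r in results)
    clinician_only = sum(r.clinician_flagged and not r.model_flagged for r in results)
    return {
        "total": total,
        "agreement_rate": agree / total if total else 0.0,
        "model_only": model_only,          # flagged by the model alone
        "clinician_only": clinician_only,  # flagged by the clinician alone
    }


# Made-up trial data for illustration only.
results = [
    ShadowResult("study-1", True, True),
    ShadowResult("study-2", False, False),
    ShadowResult("study-3", True, False),
    ShadowResult("study-4", False, True),
]
summary = agreement_summary(results)
```

Running every candidate model through the same summary is one way to keep comparisons between vendors on a like-for-like footing; the disagreements are usually the cases worth reviewing in detail.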

Maintenance, operations and value realisation

NHS trusts must continuously evaluate AI systems to prove their effectiveness. Even though more evidence is needed to prove the full efficacy of AI, progress will continue at pace. It is therefore essential that the right architecture and infrastructure are created now, before models are woven into many clinical and operational workflows.

With competition for limited budgets, NHS trusts need to ensure they have processes in place to measure the value AI delivers. This will guide future investments and ensure AI initiatives remain sustainable and impactful.

Evaluating AI impact is not simple, and NICE believes more work needs to be done to justify long-term investment in models. In October 2024, NICE released a new reporting standard for health economic evaluations of AI technologies, called CHEERS-AI. Any evaluation should look at more than just how the model has performed and take into account the impact on the wider clinical service.

For example:

- Has it increased or decreased clinician workload?

- Is it impacting clinicians’ confidence in their interpretations?

- Are junior clinicians getting the required exposure without AI to build up their skill level?

- Is the model performance increasing false positives and leading to unnecessary and costly treatment (such as an increase in CT scans following the initial X-ray)?

The benefits analysis of an AI deployment is wide-ranging. Done well, it will help the trust and the wider NHS adopt the models that have the greatest positive impact on patients and the clinical services they interact with.
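The false-positive question above lends itself to a simple worked calculation. The Python sketch below is illustrative only: the `service_impact` function is a hypothetical helper, the counts and the follow-up cost are made-up figures, and a real evaluation would cover far more than these few measures.

```python
def service_impact(tp: int, fp: int, tn: int, fn: int, follow_up_cost: float) -> dict:
    """Basic accuracy measures plus the cost of follow-ups triggered by false positives."""
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    # Positive predictive value: share of model flags that were genuine findings.
    ppv = tp / (tp + fp) if (tp + fp) else 0.0
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "false_positive_cost": fp * follow_up_cost,
    }


# Made-up figures: 1,000 studies, and an assumed cost of 100 per unnecessary follow-up scan.
impact = service_impact(tp=80, fp=40, tn=860, fn=20, follow_up_cost=100.0)
```

In this made-up example the model finds 80% of true cases, but one in three of its flags is a false positive, which would translate into 4,000 of avoidable follow-up cost. Figures like these sit alongside, not in place of, the workload and training questions listed above.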

Key questions:

- How are you evaluating the effectiveness of AI models in real-world use?

- Are there processes in place to ensure AI models continue to function as intended, especially as circumstances and data evolve?

- How will you conduct post-market surveillance of your deployed AI models to support benefits realisation and drive future investment?

- Are there processes in place to capture the value generated by AI?

- How is this data informing future AI investments?

Tip: A platform approach to the deployment of AI applications enables a holistic, consistent and scalable monitoring solution for the variety of applications deployed. This supports continued innovation by providing the ongoing clinical assurance that models are safe and scalable.

While the statutory onus is on AI model vendors to ensure the efficacy of their models, having a platform that continually monitors deployed AI in near real-time puts the power back in the NHS trust’s hands.

Monitoring AI models through a platform as they evolve over time makes it easier to check they’re performing as they should, while guarding against unintended bias.
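One simple signal a monitoring platform could track is whether a model's rate of positive findings drifts away from the rate seen during evaluation. The Python sketch below is a minimal example under that assumption; the `PositiveRateMonitor` class, the window size and the tolerance are hypothetical choices, and positive rate is only one of many proxies for model health.

```python
from collections import deque


class PositiveRateMonitor:
    """Flags when the rolling positive rate moves away from an expected baseline."""

    def __init__(self, baseline_rate: float, window_size: int = 100, tolerance: float = 0.10):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.window = deque(maxlen=window_size)  # keeps only the most recent outputs

    def record(self, flagged: bool) -> bool:
        """Add one model output; return True if the rolling rate has drifted."""
        self.window.append(flagged)
        if len(self.window) < self.window.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.window) / len(self.window)
        return abs(rate - self.baseline_rate) > self.tolerance


# Made-up stream of outputs: a stable period followed by a run of positives.
monitor = PositiveRateMonitor(baseline_rate=0.2, window_size=10, tolerance=0.15)
stable = [True, False, False, False, False] * 2
alerts_stable = [monitor.record(x) for x in stable]
alerts_drifted = [monitor.record(True) for _ in range(5)]
```

An alert here does not prove the model is wrong; a change in referral patterns or scanner hardware could shift the rate too. It is a prompt for clinical and technical review, which could also be run per patient subgroup to look for signs of unintended bias.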

NHS trusts that effectively conduct an AI readiness self-assessment will be better equipped to integrate AI into their digital strategies. By understanding their current level of AI maturity, trusts can take actionable steps to address gaps, align their strategies with broader NHS digital goals, and ensure AI delivers real, measurable improvements to patient care and operational efficiency.

Being AI-ready isn’t just about implementing cutting-edge technology; it’s about aligning AI with the trust’s priorities, ensuring ethical governance, cultivating a supportive culture, and continuously realising value from AI investments. By following the steps outlined in this article, NHS trusts can position themselves to not just adopt AI, but to thrive with it as part of their digital future.

If you’re a trust or imaging network looking to build your AI strategy, source funding for it, or deliver a funded strategy to meet national targets, we can help.

Get in touch with our friendly expert AI team today.